In the context of this article, when we talk about generative assistants, we’re not just referring to chatbots that answer customer questions or basic FAQ systems. Rather, we’re examining a structured way of defining, creating, and assessing AI systems along two axes: the tasks they can do (how complex they are) and the technology they use (how advanced it is).

Over time, this method has grown into a framework that clarifies what it means to progress from a very simple assistant to something that verges on full autonomy or even the beginnings of artificial general intelligence (AGI). This article walks through that entire landscape, with detailed examples and case studies along the way, outlining a reusable and stable formula that can quantify the power of an assistant.

Introducing Two-Dimensional Classification

In the previous article, one of the main ideas we looked at was that an AI assistant can be classified along two dimensions. A quick recap:

- Class Number measures the functional complexity of an assistant. It shows the range and depth of tasks the assistant can do, as well as how well it understands context, learns, interacts, and makes decisions.

- Level Letter, on the other hand, refers to technological complexity, from basic text prompts to advanced AI combined with robotics or quantum computers.

It’s important as we proceed to see these aspects as working together rather than against each other. You could have an assistant that is functionally sophisticated (because it carries out complex or context-heavy tasks) but relies on a relatively simple tech stack. On the other hand, you could have a simple assistant from a functional perspective but place it on a large-scale distributed infrastructure (which may or may not be practical, as we’ll see later). By combining a class (1 to 5) with a level (A to E), you get a clear perspective on what an assistant does and how it does it.

Why Choose This Method?

Using this method helps prevent confusion by separating “What are the AI’s capabilities?” from “What technology is it built on?”. It also highlights when a system might be overengineered (a simple functional class on an extremely complex level) or underpowered (a high functional class stuck at an inadequate technology level), which helps you spot wasted resources or pinpoint why an assistant is underperforming. Most importantly, this combination is a great way to chart a path for evolving your assistant, either by incrementally raising functional complexity (class), improving the tech stack (level), or both. For the full breakdown of what each letter and number means, see the previous article in this series.

Possible vs. Impossible Combinations

Just because every generative assistant is, at its core, described by a class and a level does not mean you can build a viable assistant from any class and any level. The two dimensions may look independent, but in practice some combinations work well together while others do not. Sometimes you get a mismatch: a trivial function (Class 1) paired with an extremely advanced tech stack (Level E), or an expert-level function (Class 4) running on only a simple prompt-based system (Level A). I have taken the liberty of breaking down which combinations are viable and which are simply not.

Possible Combinations

- Class 1: Pairs well with Level A or Level B. Choose Level A for something like a basic, no-code FAQ, or Level B if you want some minimal scripting logic.

- Class 2: Usually suits Level A, B, or C. It might be a step up from single-step tasks to multi-step tasks and it could incorporate some machine learning at Level C.

- Class 3: Works with Level B (for advanced scripting) but more likely Level C or D to handle deeper ML or some distributed computing if tasks are large-scale. An example here is a chatbot that integrates user behavior data and has real-time logic, and it could be labeled Class 3 Level C or D.

- Class 4: Tends to require Level C or D. It needs advanced ML and potentially distributed systems for real-time decision-making. A near-real-time healthcare assistant or an enterprise AI system is a good example.

- Class 5: Connects best with Level D or E, reflecting near-human autonomy, advanced ML, IoT, or even quantum computing. A system that monitors an entire city’s infrastructure with minimal human supervision might be Class 5 Level D. A drone fleet with quantum-inspired algorithms for route optimization might be Class 5 Level E. I hope you get the gist.

Impossible or Illogical Combinations

- Class 1 with Levels C, D, and E: Overkill. You don’t need large-scale machine learning or quantum computing for a single-step FAQ.

- Class 2 with Levels D and E: Wasted resources. An intermediate-level assistant can’t leverage advanced distributed systems or robotics effectively.

- Class 3 with Level A: Not feasible, since advanced tasks and context awareness can’t be supported by a text-based, no-code prompt.

- Class 4 with Level A or B: Expert tasks with advanced reasoning or real-time data analysis can’t run on a system that’s limited to simple prompts or basic scripting.

- Class 5 with Level A or B: Near-human cognitive operations require a substantial tech backbone. Basic text prompts or simple scripting can’t handle the complexity or autonomy of Class 5 demands.

The bottom line here is this: each functional tier (class) must be paired with a realistic technology level (level). If either side is too high or low relative to the other, you get an inefficient, underutilized system or an outright impossibility.
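The pairing rules above can be captured in a small lookup table. The sketch below is illustrative only: `VIABLE`, `MISMATCHED`, and `check_combination` are hypothetical names, and the tables simply transcribe the two lists. Any pairing the lists don’t mention (Class 3 or 4 with Level E, Class 5 with Level C) is reported as “not covered” rather than guessed.

```python
# Levels listed as viable for each class ("Possible Combinations").
VIABLE = {
    1: {"A", "B"},
    2: {"A", "B", "C"},
    3: {"B", "C", "D"},
    4: {"C", "D"},
    5: {"D", "E"},
}

# Levels listed as impossible or illogical for each class.
MISMATCHED = {
    1: {"C", "D", "E"},
    2: {"D", "E"},
    3: {"A"},
    4: {"A", "B"},
    5: {"A", "B"},
}

LEVELS = ("A", "B", "C", "D", "E")


def check_combination(class_num: int, level: str) -> str:
    """Classify a Class/Level pairing using only the two lists above."""
    level = level.upper()
    if class_num not in VIABLE or level not in LEVELS:
        raise ValueError(f"unknown pairing: Class {class_num}, Level {level}")
    if level in VIABLE[class_num]:
        return "viable"
    if level in MISMATCHED[class_num]:
        # Above every viable level means overkill; below means underpowered.
        highest_viable = max(LEVELS.index(lv) for lv in VIABLE[class_num])
        if LEVELS.index(level) > highest_viable:
            return "overengineered"
        return "underpowered"
    return "not covered"  # neither list mentions this pairing


for class_num, level in [(1, "A"), (1, "E"), (4, "A"), (5, "C")]:
    print(f"Class {class_num}, Level {level}: {check_combination(class_num, level)}")
```

Splitting mismatches into “overengineered” and “underpowered” mirrors the two failure modes described earlier in the article.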

Formulas for Quantifying “Power”

How do we measure a generative assistant’s overall power beyond “low, medium, or high”? This question has been a waking nightmare for me for months, and, as I usually do, I broke the problem down into its smallest parts and simplified it. In doing so, I realized that we can assign numeric scores and combine them into a single value, P. Two main formulas follow from this.

Simple Multiplicative Formula

$$P = C \times L_t$$

- C= class complexity; typically a numeric representation of how advanced the assistant is across different dimensions (task, context, learning, interaction, autonomy).

- Lt = Technology Level, where A=1, B=2, C=3, D=4, E=5.

If an assistant is sufficiently advanced in functionality (Class 3 or 4) and also uses a robust tech stack (maybe Level D), P (power level) becomes very high. Conversely, a basic system with minimal functionality (Class 1) and the simplest level (A) yields a low P score.

This formula should be used in early-stage comparisons when you want a quick snapshot of your assistant’s overall power. Also, if you don’t have any need for granularity and you’re fine with an aggregate measure for your assistant (without weighting each complexity type individually), this works fine as well.

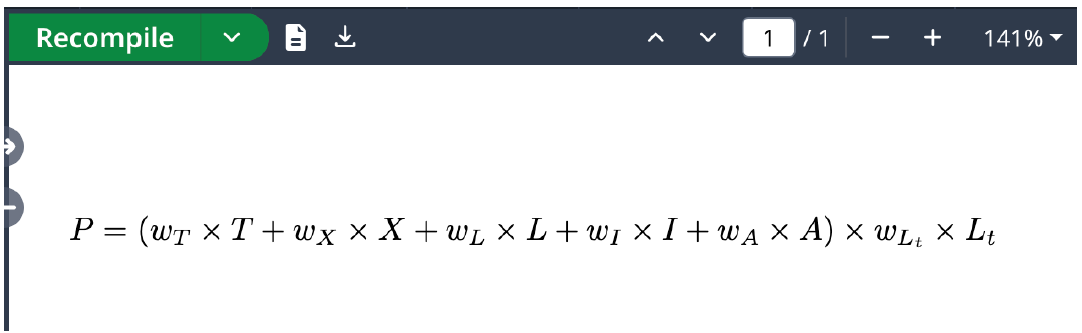
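As a quick sketch of the simple formula, the snippet below maps level letters to their numeric values and multiplies. It assumes C is supplied as a single number (here, the class number itself, for simplicity); the prototype names and scores are made up for illustration.

```python
# Numeric values for the level letters, as defined above (A=1 ... E=5).
LEVEL_SCORES = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}


def simple_power(class_complexity: float, level: str) -> float:
    """Simple multiplicative formula: P = C x Lt."""
    lt = LEVEL_SCORES[level.upper()]
    return class_complexity * lt


# Hypothetical prototypes: (class complexity C, level letter).
prototypes = {
    "faq-bot": (1, "A"),
    "support-bot": (3, "C"),
    "ops-assistant": (4, "D"),
}

# Rank the batch by power, highest first, for a quick snapshot.
ranked = sorted(prototypes.items(), key=lambda kv: simple_power(*kv[1]), reverse=True)
for name, (c, level) in ranked:
    print(f"{name}: P = {simple_power(c, level)}")
```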

Weighted Formula

Here:

- T, X, L, I, A represent numeric scores for task, contextual, learning, interaction, and autonomy complexities.

- wT , wX , wL, wI , wA are weights that let you place more or less importance on each aspect.

- Lt is still the numeric representation of the technology level, and wLt is the weight you assign to the technology’s significance overall.

When to use:

- Detailed Evaluation: You have certain priorities (maybe user interaction is crucial, so you want to weigh interaction complexity higher than the rest).

- Industry-Specific Needs: Some industries care about autonomy more than user interaction, or vice versa.

- Optimization: If you’re deciding where to invest resources, such as boosting your system’s autonomy or its learning abilities, the weighted approach guides you toward the most impactful factor for your use case.
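The weighted formula itself isn’t written out in the text here, so the sketch below assumes the most direct reading of the definitions: P = (wT·T + wX·X + wL·L + wI·I + wA·A) × (wLt·Lt). Under that assumption, setting every weight to 1 reduces it to the simple formula. The `Complexity` class, the `weighted_power` function, and the scores in the usage lines are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass
class Complexity:
    """Scores for the five complexity dimensions."""
    task: float         # T
    context: float      # X
    learning: float     # L
    interaction: float  # I
    autonomy: float     # A


def weighted_power(c: Complexity, lt: float, weights: dict[str, float] | None = None) -> float:
    """Assumed form: (wT*T + wX*X + wL*L + wI*I + wA*A) * (wLt*Lt).

    Weights are keyed by field name, plus "technology" for wLt.
    Any weight not supplied defaults to 1.0.
    """
    weights = weights or {}
    weighted_c = sum(weights.get(f.name, 1.0) * getattr(c, f.name) for f in fields(c))
    return weighted_c * weights.get("technology", 1.0) * lt


# Hypothetical assistant where user interaction is the top priority.
bot = Complexity(task=2, context=3, learning=2, interaction=4, autonomy=1)
print(weighted_power(bot, lt=3))                                 # all weights 1.0
print(weighted_power(bot, lt=3, weights={"interaction": 2.0}))  # interaction doubled
```

Defaulting missing weights to 1.0 means you only have to state the dimensions you actually want to emphasize or de-emphasize.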

For example:

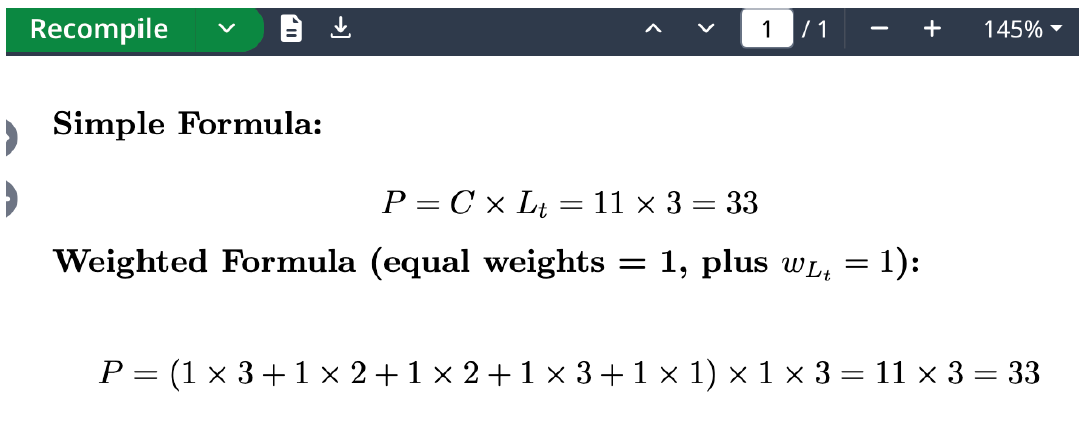

Let’s leave theory for a moment and try to run an example. Let’s say you have:

- T = 3 (Multi-step tasks)

- X = 2 (Basic context awareness)

- L = 2 (Basic learning)

- I = 3 (Multi-modal interaction)

- A = 1 (Manual operation)

- Summed up, C=3+2+2+3+1=11

- Lt=3 (Level C, meaning ML + API Integration)

Plugging these into the simple formula gives:

$$P = C \times L_t = 11 \times 3 = 33$$
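The arithmetic for this example can be checked in a few lines of Python. The dictionary keys mirror the article’s symbols, and the level mapping (A=1 through E=5) is the one defined for the simple formula.

```python
# Dimension scores from the example above.
scores = {
    "T": 3,  # multi-step tasks
    "X": 2,  # basic context awareness
    "L": 2,  # basic learning
    "I": 3,  # multi-modal interaction
    "A": 1,  # manual operation
}
LEVEL_SCORES = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}

c = sum(scores.values())  # C = 3 + 2 + 2 + 3 + 1
lt = LEVEL_SCORES["C"]    # Level C: ML + API integration
p = c * lt                # P = C x Lt

print(f"C = {c}, Lt = {lt}, P = {p}")  # C = 11, Lt = 3, P = 33
```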

Application of Formulas

Power Formula

- Great for quick cross-comparisons or early-phase prototypes of assistants.

- The limitation is that it doesn’t differentiate which dimension matters the most and treats all complexities the same.

Weighted Formula

- Great for domains where certain complexities are critical. For instance, if you’re building an assistant for mental health counseling, you might weigh contextual understanding and interaction complexity very highly while restricting autonomy. I once saw a movie in which an artificial intelligence was connected to the internet and absorbed all of humanity’s knowledge. When asked to solve global warming and the climate disaster, the AGI decided that the solution to the earth’s crisis was to eliminate humanity. We really don’t want a counseling assistant to go off and start advising patients to jump off balconies, hence the autonomy restriction.

- The limitation is that choosing the weights takes more time, and those choices can be subjective.

Why Some Industries Need Different Weights

Consider healthcare vs. e-commerce:

- Healthcare would probably prioritize accuracy, context awareness, and reliability above all other factors, which means higher weights on task and contextual complexity. Interaction might also be critical for patient communication. Autonomy may be moderate and subject to regulatory constraints.

- E-commerce, on the other hand, would weigh interaction (personalization, style, user engagement) heavily, possibly combined with strong learning (recommendation models). Autonomy is rarely as critical: some tasks (shipping, billing) might be automated, but you don’t typically allow a shopping assistant to run entire business operations unsupervised.

By adjusting these weights, you ensure your final P value reflects the real importance each dimension has within your industry or application.
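To make the healthcare vs. e-commerce contrast concrete, the sketch below scores one hypothetical assistant under two weight profiles. The weight values are invented to mirror the qualitative priorities described above (healthcare up-weights task, context, and interaction and keeps autonomy moderate; e-commerce up-weights interaction and learning and keeps autonomy low); they are not calibrated numbers.

```python
from __future__ import annotations

# Hypothetical weight profiles; the numbers are illustrative, not from the article.
PROFILES = {
    "healthcare": {"task": 1.5, "context": 1.5, "learning": 1.0, "interaction": 1.2, "autonomy": 0.8},
    "e-commerce": {"task": 1.0, "context": 1.0, "learning": 1.5, "interaction": 1.5, "autonomy": 0.5},
}


def weighted_c(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted class complexity: sum of weight * score over every dimension."""
    return sum(weights[dim] * score for dim, score in scores.items())


# One hypothetical assistant, scored once and evaluated under each profile.
assistant = {"task": 3, "context": 4, "learning": 2, "interaction": 3, "autonomy": 2}
lt = 3  # Level C

for industry, weights in PROFILES.items():
    print(f"{industry}: P = {weighted_c(assistant, weights) * lt:.1f}")
```

With these made-up numbers, the same assistant scores higher under the healthcare profile, because its strongest dimension (context) is one that profile up-weights.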

Case Study: AGI (Artificial General Intelligence)

The original title for this article was “Designing Life Experiences for and Using Artificial Intelligence.” This article has tried to create a scalable framework, built on formulas, that can be used to classify, define, and create AI assistants. So far, the classes have described specialized AI that grows from simple single-step tasks (Class 1) to near-autonomous, multi-domain expertise (Class 5).

But what if we want to explore the idea of true artificial general intelligence (AGI)?

I am talking about AI with the ability to handle an essentially infinite range of tasks, adapt to new situations and contexts on the go, learn autonomously at scale, and interact with humans or machines in a deeply natural way.

AGI’s Key Characteristics

Let’s proceed step by step. When we think about AGI, what are its key characteristics?

- Universal Task Complexity (U): Unlike regular AI assistants, AGI aspires to operate in any domain. I am talking God-level expertise in any domain. To qualify as AGI, it should be able to handle language translation, scientific research, creative problem-solving, robotics, and more, all of which far exceed the bounds of a single domain.

- Adaptive Contextual Awareness (A): AGI must also interpret context from a wide variety of sources, from social nuances to data coming directly from the physical environment, and adjust its responses in ways that reflect a global understanding.

- Autonomous Learning (L): AGI must be able to improve autonomously, not just through labeled datasets in a single domain but across many domains, potentially discovering new tasks or knowledge on its own initiative without human help.

- Rich Interaction (I): Humans converse in complex, multifaceted ways. Any AGI that truly matches or exceeds human communication should handle text, speech, vision, and even emotional cues fluidly.

- Full Autonomy (F): Finally, AGI needs to be able to make independent decisions, weigh moral or ethical contexts, and carry out actions without constant human oversight.

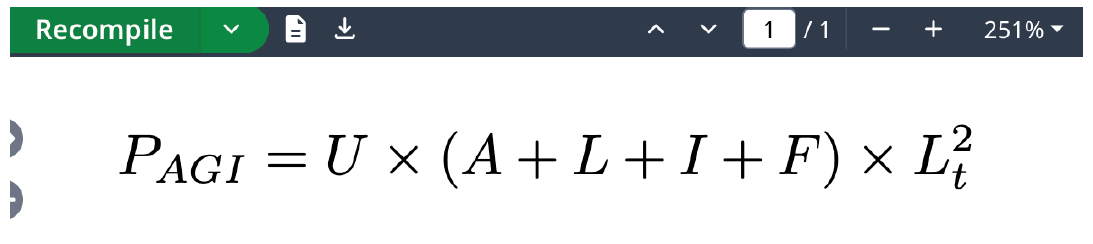

Following from this, I propose that the formula for AGI would be:

AGI Formula: P_AGI

Using the variables defined above, P_AGI can be represented as:

$$P_{AGI} = U \times (A + L + I + F) \times L_t^2$$

where:

- U = Universal Task Complexity, which represents the potential breadth of tasks that AGI is capable of. For regular assistants, we would cap this, but for AGI, U could theoretically be unlimited.

- A, L, I, F are the key capabilities that AGI must excel in: adaptive context, learning, interaction, and full autonomy. The formula treats them as additive (they are summed), while recognizing that in practice they are interdependent.

- Lt² = the technology level, squared. Squaring it indicates that the hardware and software demands of AGI grow much faster than linearly: doubling the technology level quadruples its contribution to the score. Handling a truly universal AI would require massive distributed systems, specialized accelerators, or even quantum computing.
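A direct transcription of P_AGI, with a check of the design choice behind squaring Lt: holding every other score fixed, doubling the technology level multiplies the score by four. The function and parameter names are illustrative.

```python
def p_agi(universal: float, context: float, learning: float,
          interaction: float, autonomy: float, tech_level: float) -> float:
    """P_AGI = U x (A + L + I + F) x Lt^2."""
    capabilities = context + learning + interaction + autonomy
    return universal * capabilities * tech_level ** 2


# Hold U and the four capabilities fixed; vary only the technology level.
base = p_agi(5, 5, 5, 5, 5, tech_level=2)
doubled = p_agi(5, 5, 5, 5, 5, tech_level=4)
print(base, doubled, doubled / base)  # 400 1600 4.0
```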

Detailed Variable Definitions and Ranges

Below is a more mathematically explicit version of the proposed AGI formula, with recommended numeric ranges and definitions for each variable. The goal is to make it as “plug-and-play” as possible while still reflecting the conceptual breadth of AGI. Think of it as a scoring system you can actually use to get a single numerical value.

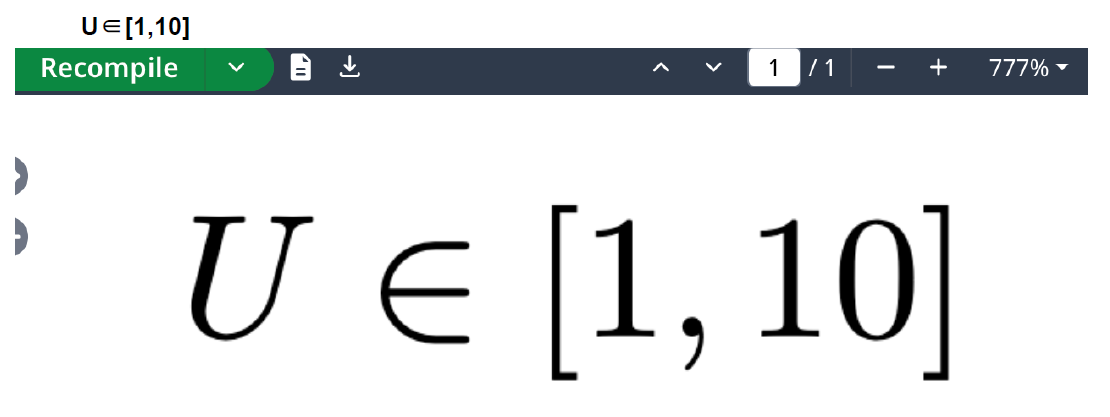

Universal Task Complexity (U)

See the definition above.

Range: A recommended integer or float scale from 1 to 10, where:

1 = The AI is effectively single-domain.

5 = It spans multiple large domains (e.g., language + vision + decision-making).

10 = It aspires to cover nearly everything a human could tackle, or even more.

Here: U ∈ [1, 10].

Adaptive Contextual Awareness (A)

How well the assistant interprets and responds to changing or nuanced contexts across all domains (social, physical, cultural, etc.).

Range: 0 to 10, where:

0 = No real contextual adaptation.

5 = moderate ability (some domain-specific adaptation, but not broad).

10 = reads subtle context signals at near-human or superhuman levels.

Here: A ∈ [0, 10].

Autonomous Learning (L)

The assistant’s capacity to learn or self-improve without domain-specific labeling or continuous human oversight. Includes meta-learning (learning how to learn).

Range: 0 to 10, where:

0 = Purely rule-based, no self-learning.

5 = Can learn from limited feedback in a few areas.

10 = continuous, open-ended learning, discovering new tasks or knowledge at will.

Here: L ∈ [0, 10].

Rich Interaction (I)

The assistant’s ability to communicate naturally across multiple modalities (text, voice, visuals, emotional cues) and manage complex dialogues or collaborations.

Range: 0 to 10, where:

0 = Bare-bones Q&A with minimal language skills.

5 = Conversational in at least one modality, with some situational awareness.

10 = Engages in highly fluid, human-level (or beyond) conversation, possibly combining text, voice, visual understanding, emotional intelligence, etc.

Here: I ∈ [0, 10].

Full Autonomy (F)

The assistant’s independence in decision-making, including handling ethical or moral dilemmas, generating goals, and acting in unstructured environments without human intervention.

Range: 0 to 10, where:

0 = requires explicit instructions for every step.

5 = autonomy in routine tasks, but escalates complex or moral issues.

10 = Formulates and pursues its own goals, capable of self-directed actions even in new, ethically ambiguous territory.

Here: F ∈ [0, 10].

Technology Level (Lt)

This reflects how advanced the hardware, software, and system architecture are—scalable cloud computing, specialized accelerators (GPUs, TPUs), quantum processors, robust robotics, and similar hardware.

Range: For AGI specifically, we could scale from 1 (bare minimum hardware) up to 5 or beyond for extremely advanced setups where:

1 = Standard consumer-level computing, single node.

3 = Distributed or large-scale HPC clusters with specialized ML hardware.

5 = Fusion of HPC, quantum computing, edge robotics, or other cutting-edge technologies.

Here: Lt ∈ [1, 5], or higher for theoretical quantum/next-gen technology.
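One way to make these ranges enforceable is to validate scores on construction. The sketch below is a hypothetical `AGIScores` dataclass; the `allow_next_gen` flag is an assumption standing in for the “or higher for theoretical quantum/next-gen” clause, lifting the upper bound on Lt only when explicitly requested.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AGIScores:
    """Scores for P_AGI, validated against the recommended ranges."""
    universal: float     # U in [1, 10]
    context: float       # A in [0, 10]
    learning: float      # L in [0, 10]
    interaction: float   # I in [0, 10]
    autonomy: float      # F in [0, 10]
    tech_level: float    # Lt in [1, 5]
    allow_next_gen: bool = False  # hypothetical: lift the Lt cap for next-gen tech

    def __post_init__(self) -> None:
        bounds = {
            "universal": (1, 10),
            "context": (0, 10),
            "learning": (0, 10),
            "interaction": (0, 10),
            "autonomy": (0, 10),
            "tech_level": (1, float("inf") if self.allow_next_gen else 5),
        }
        for name, (low, high) in bounds.items():
            value = getattr(self, name)
            if not low <= value <= high:
                raise ValueError(f"{name}={value} is outside [{low}, {high}]")

    def score(self) -> float:
        """P_AGI = U x (A + L + I + F) x Lt^2."""
        capabilities = self.context + self.learning + self.interaction + self.autonomy
        return self.universal * capabilities * self.tech_level ** 2


print(AGIScores(10, 10, 10, 10, 10, 10, allow_next_gen=True).score())  # 40000

try:
    AGIScores(universal=8, context=11, learning=6, interaction=8, autonomy=5, tech_level=4)
except ValueError as err:
    print(err)  # context=11 is outside [0, 10]
```

Rejecting out-of-range values up front keeps scores from different raters comparable.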

Example

Let’s say we have an ambitious AI system with:

- U=8 (targets many domains, though not truly everything).

- A=7 (very good contextual awareness, but not flawless).

- L=6 (learns autonomously in multiple ways but is not fully self-evolving).

- I=8 (rich conversational/visual interaction).

- F=5 (It’s fairly autonomous but still calls for human supervision in truly uncertain areas).

- Lt =4 (advanced distributed computing, specialized ML hardware, but not full quantum or exotic HPC).

Steps:

- Sum up: $A + L + I + F = 7 + 6 + 8 + 5 = 26$.

- Square Lt: $L_t^2 = 4^2 = 16$.

- Multiply: $P_{AGI} = U \times (A + L + I + F) \times L_t^2 = 8 \times 26 \times 16$.

- Compute: $8 \times 26 = 208$ and $208 \times 16 = 3328$, so $P_{AGI} = 3328$.

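The assistant’s score of 3328 is high, indicating a multi-domain, advanced-learning system with a robust (though not ultimate) tech stack. For reference, with every variable at the top of its recommended range (U = 10; A, L, I, F = 10; Lt = 5), the score would be 10 × 40 × 25 = 10,000, so this system sits at roughly a third of that ceiling. It’s not a maxed-out AGI (allowing Lt above 5 could push the numbers far higher), but it’s well beyond typical specialized AI or single-domain systems.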
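The steps above can be reproduced in code, which also makes it easy to ask where an extra point buys the most. In this sketch (function and variable names are illustrative), raising F by one point adds U × Lt² = 128, while raising Lt from 4 to 5 adds U × 26 × (25 − 16) = 1872, a direct consequence of squaring the technology term.

```python
from __future__ import annotations


def p_agi(u: float, capabilities: dict[str, float], lt: float) -> float:
    """P_AGI = U x (A + L + I + F) x Lt^2."""
    return u * sum(capabilities.values()) * lt ** 2


# Scores from the example above.
caps = {"A": 7, "L": 6, "I": 8, "F": 5}
u, lt = 8, 4

score = p_agi(u, caps, lt)
print(score)  # 3328

# Marginal gains: one more point of autonomy vs. one more technology level.
plus_one_f = p_agi(u, {**caps, "F": caps["F"] + 1}, lt)
plus_one_lt = p_agi(u, caps, lt + 1)
print(plus_one_f - score, plus_one_lt - score)  # 128 1872
```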

Considerations & Extensions

Weighting: If we want to emphasize one area (e.g., autonomous learning, which is crucial), we could multiply L by 1.5 or 2.0 before summing. The same logic applies to U or to Lt².

A possible variant under the weighting consideration might be:

$$P_{AGI} = w_U U \times (w_A A + w_L L + w_I I + w_F F) \times (w_{L_t} L_t)^2$$

Upper Limits: It is possible to extend Lt beyond 5 if we eventually develop technologies even more advanced than “Level E.” For instance, allowing Lt = 10 for purely hypothetical quantum-driven supercomputing gives Lt² = 100, which dramatically boosts the score.

Ethical or Moral Reasoning: We can’t discuss AGI without touching on ethics and morals. Some might decide that “Full Autonomy (F)” should be split further into separate dimensions such as “Ethical Reasoning” or “Value Alignment.” These would then become extra terms in the sum: (A + L + I + F + Ethics).
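The weighted variant and the optional extra terms can share one function. This sketch (names are hypothetical) treats the capability terms as a dictionary, so an additional dimension such as “Ethics” is just another key; weights not supplied default to 1.0, which reproduces the unweighted formula. The Ethics score of 6 in the last line is made up for illustration.

```python
from __future__ import annotations


def weighted_p_agi(u: float, capabilities: dict[str, float], lt: float,
                   weights: dict[str, float] | None = None) -> float:
    """Weighted variant: wU*U x (sum of w_k * k) x (wLt * Lt)^2.

    `capabilities` maps symbols ("A", "L", "I", "F", plus any extra terms)
    to scores. Weights share those keys, plus "U" and "Lt"; missing ones default to 1.0.
    """
    w = weights or {}
    cap_sum = sum(w.get(name, 1.0) * score for name, score in capabilities.items())
    return w.get("U", 1.0) * u * cap_sum * (w.get("Lt", 1.0) * lt) ** 2


caps = {"A": 7, "L": 6, "I": 8, "F": 5}
print(weighted_p_agi(8, caps, 4))                      # unweighted: 3328.0
print(weighted_p_agi(8, caps, 4, weights={"L": 1.5}))  # learning emphasized: 3712.0
print(weighted_p_agi(8, {**caps, "Ethics": 6}, 4))     # hypothetical Ethics term: 4096.0
```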

Practical Implementation: In practice, a review board or a set of expert raters should assign the values of A, L, I, and F because, let’s be honest, these are inherently subjective no matter how you cast them. U is also somewhat subjective: how “universal” do you consider your tasks to be?

However, giving each variable a numeric scale (0–10, or 1–10 for U) with guidelines for each step helps us track progress as a system scales, develop effective strategies, and measure results.
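The article doesn’t prescribe how a review board should combine its ratings. One common choice, sketched below as an assumption, is to take the median per variable and flag any variable whose ratings spread too widely for follow-up discussion. The ratings and the `max_spread` threshold of 1.0 are hypothetical.

```python
from __future__ import annotations

from statistics import median, pstdev

# Hypothetical ratings from three reviewers for one system (0-10 scales).
ratings = {
    "A": [7, 8, 6],
    "L": [6, 6, 5],
    "I": [8, 9, 8],
    "F": [5, 3, 6],
}


def aggregate(ratings: dict[str, list[float]],
              max_spread: float = 1.0) -> tuple[dict[str, float], list[str]]:
    """Take the median per variable and flag variables where raters disagree."""
    consensus = {var: median(scores) for var, scores in ratings.items()}
    disputed = [var for var, scores in ratings.items() if pstdev(scores) > max_spread]
    return consensus, disputed


consensus, disputed = aggregate(ratings)
print(consensus)  # medians per variable
print(disputed)   # variables to send back for discussion
```

The median is less sensitive than the mean to a single outlying rater, which suits small review panels.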

The AGI Formula: Reality and Criticism

While the classification system we have developed is straightforward for typical AI assistants, AGI introduces a whole different realm of complexity. By design, an AGI tries to unify or transcend many domains and:

- It might hold vast, open-ended knowledge or process countless streams of sensory information.

- It relies on advanced, self-directed learning methods (sometimes referred to as meta-learning, where it learns how to learn new tasks).

- It’s not pinned to a single knowledge domain but can pivot from language translation to design engineering or creative storytelling.

The AGI formula I proposed reflects these challenges:

$$P_{AGI} = U \times (A + L + I + F) \times L_t^2$$

Of course, this formula is partly conceptual: true AGI is still more of a frontier topic than a commercial product. But I believe that thinking about it in this way going forward prepares us for the day we have to measure or rank the broad intelligence of a system in a consistent manner.


Practical Considerations and Guidance

Choosing the Right Formula

As I said earlier, the simple formula is best if you’re in the early design phase or just want a standard ranking for a batch of prototypes. It’s easy to compute and quick to compare.

The weighted formula applies if the domain you are designing for has critical dimensions. You could be building a system that absolutely must be context-aware above all else; setting a higher weight on context ensures your final power score reflects that priority.

Watching for Over- or Under-Engineering

If your assistant is only Class 1 or 2, there’s no point spinning up a giant cluster or exploring quantum algorithms for its scripting. You’re better off with a simpler approach that meets the actual functional demand. Conversely, if you aim for Class 4 or 5 tasks, like real-time financial trading or city-scale resource management, a basic script approach (like Level B) will be hopelessly inadequate.

Evolving Over Time

Many development roadmaps start on a very small scale, then grow. For example:

Phase 1: Build a Class 2, Level B prototype to handle multi-step tasks with minimal logic.

Phase 2: Incorporate ML and external APIs (shifting to Class 3, Level C). Now the assistant can personalize user interactions better.

Phase 3: Aim for partial autonomy, real-time scaling, or domain expansions (Class 4 or 5, Level D).

This gradual approach will ensure that you don’t jump straight into complicated solutions without having the necessary functional underpinnings or data resources.

Parting Words

Building and evaluating generative assistants is mostly a game of balancing the tasks you want to accomplish with how advanced your technology approach is. The two-dimensional Class (1–5) vs. Level (A–E) framework gives you a quick but precise way to see where an assistant stands. This method provides clarity, regardless of whether you’re interacting with a basic FAQ bot (Class 1, Level A) or a multi-domain, near-human system (Class 5, Level D or E).

When you add the Power Formula (either simple or weighted), you get a numeric measure that can inform decision-making about development priorities, resource allocation, or comparisons among different systems. The weighted version is particularly handy when certain complexities carry more weight in your specific industry, whether it’s healthcare, finance, education, or something else.

The AGI case study also shows how this framework can adapt to even the most expansive vision of artificial intelligence. The universal task complexity, advanced contextual awareness, autonomous learning, rich interaction, and full autonomy of an AGI surpass the limits of existing classes. And while we’re still some distance from building a true AGI, this conceptual formula helps to underscore how many leaps in both hardware and methodology we likely need before an assistant can really do it all.

No matter where your AI assistant falls on this map, the combination of functional classes, technology levels, and power formulas offers a comprehensive blueprint for understanding, designing, and scaling AI systems in a structured way. And if one day we have the technology capacity for a system that truly does everything—AGI—this blueprint remains relevant and will remind you that function and technology must advance hand in hand.
